I’m a Member of Technical Staff at OpenAI working on monitoring LLM agents for misalignment. Previously, I worked on AI control and safety cases at the UK AI Security Institute and on honesty post-training at Anthropic. Before that, I did a PhD at the University of Sussex with Chris Buckley and Anil Seth focusing on RL from human feedback (RLHF) and spent time as a visiting researcher at NYU working with Ethan Perez, Sam Bowman and Kyunghyun Cho. I studied cognitive science, philosophy and physics at the University of Warsaw.

Highlighted papers

-

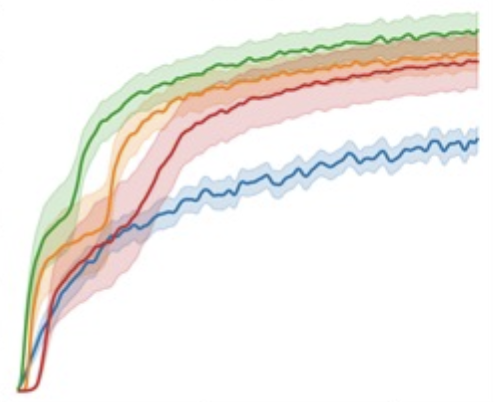

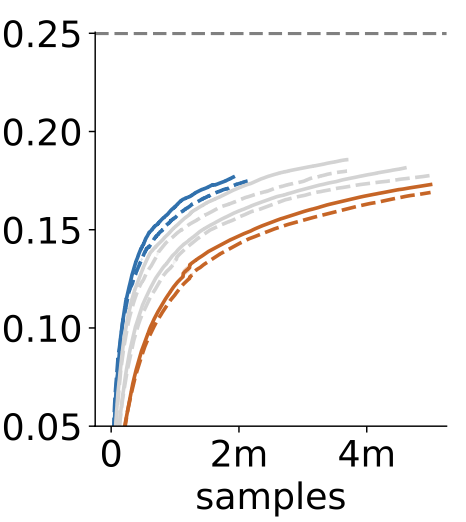

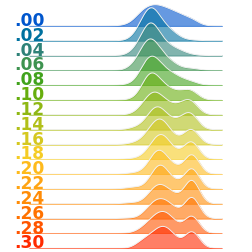

RL with KL penalties is better viewed as Bayesian inference

Findings of EMNLP 2022

-

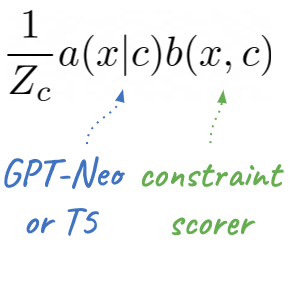

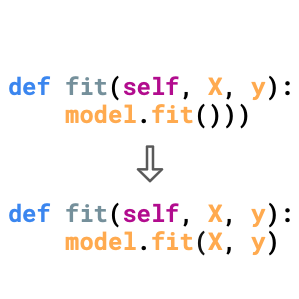

Energy-based models for code generation under compilability constraints

NLP4Programming workshop, ACL 2021

-

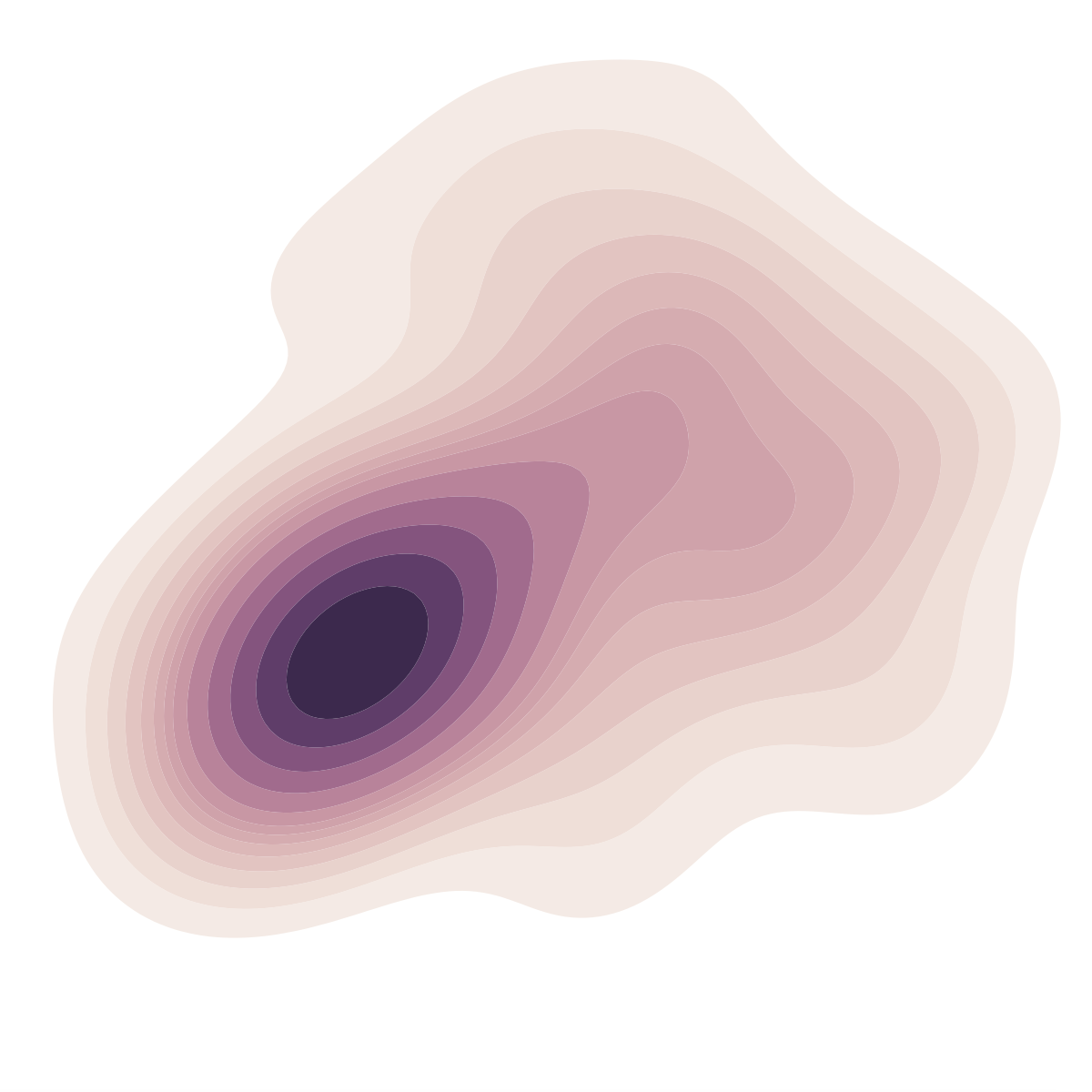

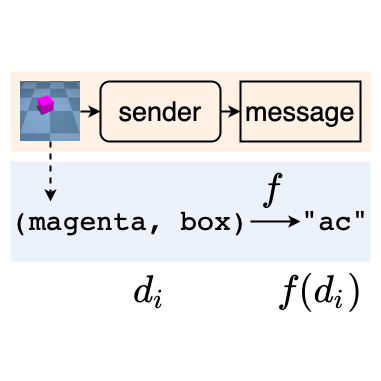

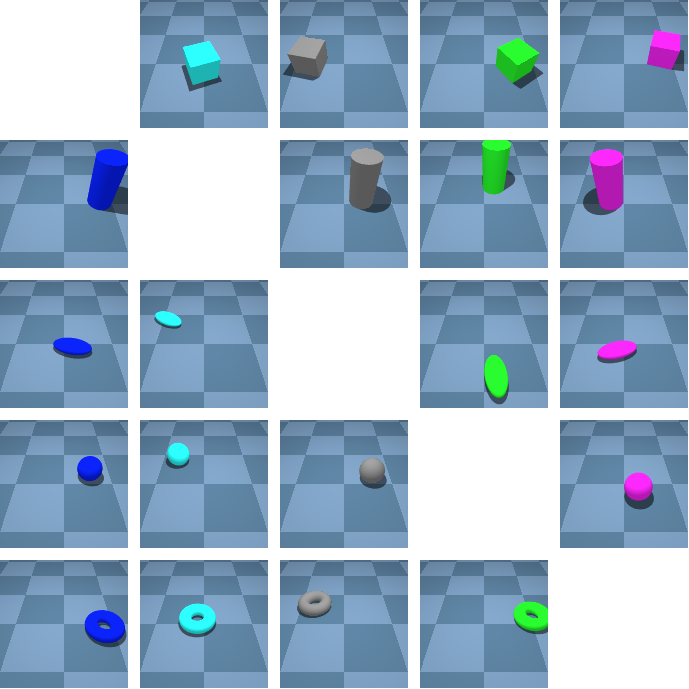

Measuring non-trivial compositionality in emergent communication

Emergent communication workshop, NeurIPS 2020

- Developmentally motivated emergence of compositional communication via template transfer (Emergent communication workshop, NeurIPS 2019)